In everyday life, it's a no-brainer for us to grab a cup of coffee from the table. We seamlessly combine multiple sensory inputs such as sight and touch in real-time without even thinking about it. However, recreating this in artificial intelligence (AI) is not quite as easy.

A group of researchers at Tohoku University has developed a new approach that integrates visual and tactile information to control robotic arms, allowing them to respond adaptively to their environment. Compared with conventional vision-based methods, this approach achieved higher task success rates. These results represent a significant advance in the field of multimodal physical AI.

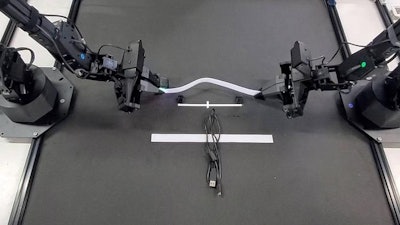

Machine learning can help artificial intelligence learn human movement patterns, enabling robots to perform daily tasks such as cooking and cleaning autonomously. For example, ALOHA (A Low-cost Open-source Hardware System for Bimanual Teleoperation), developed at Stanford University, enables low-cost, versatile remote operation of dual-arm robots and learning from those demonstrations. Because both its hardware and software are open source, the Tohoku research team was able to build on it.

However, these systems rely mainly on visual information. As a result, they cannot make the tactile judgements a human can, such as distinguishing the texture of materials or telling the front of an object from its back. For example, it is often easier to tell which side of a strip of Velcro is the front by touching it than by looking at it. Relying on vision alone is therefore a significant limitation.

Mitsuhiro Hayashibe, a professor at Tohoku University's Graduate School of Engineering, said, "To overcome these limitations, we developed a system that also enables operational decisions based on the texture of target objects - which are difficult to judge from visual information alone. This achievement represents an important step toward realizing a multimodal physical AI that integrates and processes multiple senses such as vision, hearing, and touch - just like we do."

The new system was dubbed "TactileAloha." The researchers found that the robot could perform appropriate bimanual operations even in tasks where distinguishing front from back and sensing adhesiveness are crucial, such as handling Velcro and zip ties. By applying a vision-tactile transformer, their physical AI robot exhibited more flexible and adaptive control.

By combining multiple sensory inputs to produce adaptive, responsive movements, the improved physical AI method was able to manipulate objects accurately. Robots of this kind could lend a helping hand in a wide range of practical applications. Research contributions such as TactileAloha bring us one step closer to these robotic helpers becoming a seamless part of our everyday lives.