As manufacturers continue to increase plant automation, virtualization is gaining traction as a way to control costs, increase efficiencies, and drive better long-term planning. Virtualization, the practice of using a software layer to let one physical computing server run multiple applications on virtual machines, enables manufacturers to maximize their return on hardware investments while conserving precious real estate on the plant floor. With manufacturers implementing more and more software applications across their operations, virtualization is typically a smart move—provided you make the right technology choices up front.

In an “always-on” manufacturing environment where profit margins are tight, IT resources are limited, and continuous availability is critical, it’s important that you evaluate your options carefully before virtualizing applications. Why? While many virtualization approaches offer high availability, few deliver continuous availability. In addition, some can actually increase cost and complexity, cancelling out many of the benefits you hoped to achieve from virtualization in the first place. But with insight into the different virtualization approaches, you can realize the benefits while avoiding the risks.

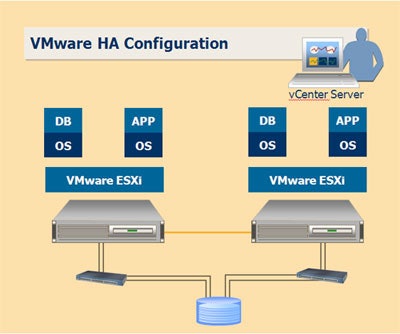

When it comes to virtualizing manufacturing applications, there are a number of approaches you can take. Failover solutions like VMware HA and Microsoft Hyper-V running on commodity servers are a popular choice. While these tools have been proven to deliver high application availability, they cannot fully protect the host server or hypervisor against unplanned downtime or data loss. If hardware problems occur, these solutions perform a restart or failover, which can take several minutes or longer depending on the size of the system. What’s more, any data not yet committed to disk at the time of the crash is lost and cannot be recovered.

An alternative approach is to consolidate your virtual machines on a fault-tolerant server with redundant hardware. Some fault-tolerant servers on the market today feature duplex hardware components that process the same instructions at precisely the same time, in lockstep, to deliver continuous application availability. If one component fails, its partner simply continues normal operations with zero downtime or data loss. Lockstep processing ensures that errors are detected and that the system can survive a CPU failure without interrupting processing, losing data, or compromising performance.
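To put the gap between high availability and continuous availability in concrete terms, it can help to convert an availability percentage into expected downtime per year. The short Python sketch below does only that arithmetic. The function name and the availability levels in the loop are illustrative assumptions, not figures from any vendor or product named here.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def annual_downtime_minutes(availability_pct: float) -> float:
    """Return expected minutes of downtime per year for a given availability percentage."""
    if not 0.0 <= availability_pct <= 100.0:
        raise ValueError("availability_pct must be between 0 and 100")
    return MINUTES_PER_YEAR * (1.0 - availability_pct / 100.0)


if __name__ == "__main__":
    # Illustrative availability levels only; substitute the levels you are evaluating.
    for pct in (99.9, 99.99, 99.999):
        minutes = annual_downtime_minutes(pct)
        print(f"{pct}% available: about {minutes:.1f} minutes of downtime per year")
```

Run against your own targets, this gives a quick sense of how many minutes of lost production each availability level implies before you compare solutions.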

Then there’s the issue of cost. Failover solutions are typically deployed on a minimum of two servers and require a separate storage area network (SAN), switches, and multiple software licenses — all of which can drive up purchase and installation expenses as well as support and administration costs. Add in the cost of potential downtime and these solutions may be more expensive than they appear at first glance.

Configuring your virtualized environment on a fault-tolerant server is often a more cost-effective approach. Fault-tolerant servers were once more expensive than failover alternatives, but virtualization often makes the price comparable or even lower. Because fault-tolerant servers include replicated hardware components (CPUs, chipsets, and memory), they eliminate the need for a second server, another copy of the operating system, duplicate application licenses, redundant switches, and external storage. A simpler configuration with fewer components means lower costs, both upfront and throughout the lifecycle of your virtualized environment.

| A fault-tolerant server is more cost-effective because you don’t need vCenter Server, multiple licenses, a second server, redundant switches, and external storage. |

Depending on the size and structure of your manufacturing facility, chances are that IT support is in short supply. If this is the case, you have to beware of virtualization solutions that add significant operational complexity to your computing environment. Because failover solutions are deployed in clustered server configurations with multiple switches and external storage, they require specialized IT expertise to implement, administer, and maintain. After all, cluster capacity, policies, resources, and software changes must all be managed and tested on an ongoing basis to validate proper operation.

Setup of a fault-tolerant server is relatively simple, more or less the same as setting up a general-purpose server. Plus, because fault-tolerant servers use a single copy of the operating system and a single copy of any standard version of each application, they are easier to manage and support over time, a real plus when your IT resources are non-existent or stretched too thin.

If your manufacturing organization is thinking about virtualizing applications, it’s in your best interest to do your homework upfront and thoroughly compare and contrast available virtualization approaches. A smart approach is to look at a detailed Total Cost of Ownership (TCO) analysis that factors in purchase and installation, support and administration, and costs of downtime. It also helps to take into consideration the expertise and bandwidth of your IT resources and how much operational complexity they can realistically support. And finally, you should think about your organization’s tolerance for downtime and whether high availability or continuous processing can better meet your needs. The virtualization technology you select can have a significant impact on your operations, your output, and your bottom line. Choose carefully.
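The TCO comparison described above can be sketched as a small calculation. The Python below is a minimal illustration that adds up the three cost categories named in this article: purchase and installation, support and administration, and cost of downtime. The class, the function, and every dollar and hour figure are hypothetical placeholders; replace them with quotes and estimates from your own plant before drawing any conclusion.

```python
from dataclasses import dataclass


@dataclass
class Option:
    """One virtualization approach under evaluation (all fields are your own estimates)."""
    name: str
    purchase_and_install: float     # one-time cost: hardware, licenses, installation
    annual_support_admin: float     # recurring cost per year: support and administration
    downtime_hours_per_year: float  # expected unplanned downtime per year


def total_cost_of_ownership(option: Option, years: int, downtime_cost_per_hour: float) -> float:
    """Sum one-time, recurring, and downtime costs over the evaluation period."""
    recurring = option.annual_support_admin * years
    downtime = option.downtime_hours_per_year * downtime_cost_per_hour * years
    return option.purchase_and_install + recurring + downtime


if __name__ == "__main__":
    # Placeholder inputs for illustration only; they are not benchmarks.
    candidates = [
        Option("Option A", purchase_and_install=60_000,
               annual_support_admin=15_000, downtime_hours_per_year=4.0),
        Option("Option B", purchase_and_install=50_000,
               annual_support_admin=8_000, downtime_hours_per_year=0.5),
    ]
    for candidate in candidates:
        tco = total_cost_of_ownership(candidate, years=5, downtime_cost_per_hour=10_000)
        print(f"{candidate.name}: estimated 5-year TCO = ${tco:,.0f}")
```

Keeping downtime as an explicit line item makes it harder to overlook, and that is the cost most likely to change the ranking between otherwise similar options.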

Frank Hill is the director of manufacturing business development for Stratus Technologies. Prior to joining Stratus, Mr. Hill led MES business development at Wyeth, Merck & Co., and AstraZeneca for Rockwell Automation. He has also worked in manufacturing leadership at Merck & Company. He is a frequent speaker and commentator on technology-enabled manufacturing solutions.