For businesses that rely on scientific innovation to differentiate themselves, being first to market with a novel, more effective, or more attractively priced product is the Holy Grail: a chance to “own” the customer base before competitors catch up. But the lifecycle that turns a good idea into a viable product keeps growing more complex. The science that drives innovation is more demanding, and the research-development-manufacturing value chain is increasingly global and extended. As a result, the speed and efficiency that are critical to success are suffering.

Data overload, siloed information systems, disjointed processes and a lack of transparency plague the many activities that contribute to product development and commercialization, leading to delays, errors, rework and cost overruns that negatively impact time-to-market, compliance and profitability. In fact, according to IDC Manufacturing Insights[1], only 25 percent of R&D projects ultimately result in new products.

To improve results, today’s organizations need a far more streamlined and holistic approach to managing both data and processes across the end-to-end innovation lifecycle, from early research at the atomistic and molecular level all the way to manufacturing.

Data, Data Everywhere

Information management and access — particularly at those crucial hand-offs between research, development, and manufacturing — is one activity that’s especially susceptible to productivity gaps during the innovation lifecycle. Modern R&D involves enormous amounts of data from a variety of sources: Information generated from lab experiments, modeling and simulation, QA/QC test results, historical findings, and more all contribute to the knowledge that drives the creation of new products.

This data should be an asset to multiple stakeholders across the organization — imagine the efficiency gains that could be realized if plant managers had immediate knowledge of compliance or production issues uncovered during sample testing. But all too often, project contributors can’t take full advantage of valuable information because large swaths of it are inaccessible beyond departmental, disciplinary, or system “silos.”

Why? Increasing organizational complexity is one reason. As more businesses outsource core parts of their product development and manufacturing, critical information becomes scattered across a global, highly fragmented extended enterprise. Today, for example, it’s not uncommon for manufacturers in every industry to work with contract research facilities, dozens of external factories, and a long list of ingredient suppliers, all based in different locations around the world.

The research team may be thousands of miles away from manufacturing operations, yet a single discovery in the lab (such as the effect of temperature on a compound’s stability) can have a major impact on later-stage activities such as processing or the selection and calibration of plant equipment. The problem is that these specialized contributors end up isolating their data in proprietary systems and applications: a laboratory notebook here, a laboratory information management system (LIMS) database there. That makes the data difficult to share, collate, analyze, and report on.

Second, traditional information management approaches have not only failed to keep pace with externalization trends. They are also struggling with the increasingly sophisticated nature of scientific research. During product development, the data that must be accessed and analyzed may include unstructured text, images, two- and three-dimensional models, and more. It may be generated by a host of advanced software systems, laboratory equipment, sensors, instruments, and devices. Yet the technologies used to capture and share this information are often woefully behind the times.

For instance, paper lab notebooks are still common, and data often cannot move beyond a single department or disciplinary group without someone transferring it by hand. On the manufacturing floor, formulation recipes and SOPs are often paper-based as well: operators record batch record data manually and then re-key it into other systems. As a result, project collaborators can spend hours searching files and cutting and pasting information together. Alternatively, they enlist IT resources to hand-code customized “point-to-point” connections that move data between systems and applications. Many of these connections run between the systems used in R&D and the product lifecycle management (PLM) and enterprise resource planning (ERP) systems. Unfortunately, these ad hoc approaches to data capture and integration are time-consuming, expensive, and prone to error.
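A back-of-the-envelope count shows why hand-coded point-to-point connections become costly as systems multiply. This is an illustrative sketch, not something the source describes. It assumes every system must exchange data with every other system and that each direct link is built and maintained separately. By contrast, a shared services layer needs only one connection per system. The function names and system counts are hypothetical.

```python
# Illustrative sketch (assumption): every system must exchange data with every
# other system, and each direct integration is built and maintained separately.


def point_to_point_links(n_systems: int) -> int:
    """Direct pairwise connections needed when every system is wired to every other."""
    return n_systems * (n_systems - 1) // 2


def hub_links(n_systems: int) -> int:
    """Connections needed when each system talks only to a shared services layer."""
    return n_systems


if __name__ == "__main__":
    # Hypothetical system counts (e.g. ELN, LIMS, PLM, ERP, instrument software, ...)
    for n in (4, 8, 12):
        print(f"{n} systems: {point_to_point_links(n)} point-to-point "
              f"vs {hub_links(n)} via a shared layer")
```

With eight systems, direct wiring implies 28 separate integrations versus 8 through a common layer. The gap grows quadratically as systems are added.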

A Unified Approach To Scientific Informatics

What’s needed is a single, unified, enterprise-class informatics framework. It should let organizations electronically integrate diverse information silos, make data more accessible to all stakeholders, and move products more efficiently through the research-development-manufacturing pipeline. Thanks to the maturing of service-oriented architecture (SOA) and web services, such an enterprise-level approach to scientific informatics is now possible.

Web services can, for example, support the integration of multiple data types and scientific applications without requiring customized (and costly) IT intervention. Once data previously scattered throughout the organization is captured electronically and made available through a single platform, all contributors can use it, no matter where, when, or how it was generated. That shortens innovation cycle times.

For example, data from commercial operations can help R&D teams better define product and process conditions, improving technology transfer and operational efficiency. Toxicologists can make their history of assay results available to formulators developing recipes for a new cosmetic. Chemists can work more closely with sourcing experts to ensure that the compounds they are developing in the lab are viable candidates for large-scale production. Most important, the critical yet problematic data hand-offs between functional areas, such as the transfer of R&D data to downstream PLM and ERP systems, can be automated, reducing the need for manual intervention.

Today a number of technologies can help organizations better capture and use scientific data and improve operations, such as chemical and biological registration systems and multidisciplinary electronic lab notebooks (ELNs). Services-based informatics platforms go a step further. They are designed specifically to help organizations build a more integrated, holistic information environment across the entire innovation lifecycle.

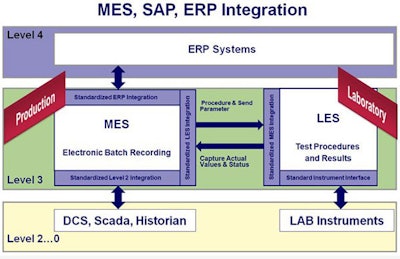

With these types of solutions in place, organizations will be better equipped to handle data access and sharing challenges. Those challenges grow more acute as innovation activities become more specialized, sophisticated, and distributed. For example, a QC Lab Execution System (LES) with direct interfaces to LIMS, PLM, and ERP systems further supports manufacturing’s “right-first-time” goals.

Data capture and information accessibility are one part of the equation. A consistent, reliable, and highly optimized process strategy is the other. The processes that guide activities such as experiment execution or sample testing are complex. They often involve multiple steps that must be continually measured and validated. Organizations want to be sure that the processes they deploy are efficient and accurate. They also want problems spotted and addressed quickly, for instance before a defective sample batch shuts down a production line.

The principle of Quality by Design (QbD) depends on this kind of “at line” control. If organizations can identify stability, efficacy, or safety issues early in the innovation lifecycle, the costs of delays and rework can be minimized or even eliminated. For example, critical product-quality data can be monitored as close to real time as possible and linked to manufacturing process parameters. It can then be used to direct immediate changes in manufacturing conditions and improve conformance to quality specifications. This is why process studies conducted during development are critical to operational excellence in commercial operations.
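A minimal sketch of this kind of “at line” check follows. It assumes a hypothetical quality attribute (assay, in percent of label claim) with illustrative specification limits and a tighter internal alert band inside them. Each reading carries the process parameter recorded alongside it, a hypothetical dryer temperature, so that a drifting result can be traced back to manufacturing conditions. All names, limits, and readings are made up for illustration. Real limits would come from the product’s validated specification.

```python
from dataclasses import dataclass

# Hypothetical specification limits for an assay result (% of label claim).
SPEC_LOW, SPEC_HIGH = 95.0, 105.0
# Hypothetical tighter alert band: in spec but outside this band triggers a warning.
ALERT_LOW, ALERT_HIGH = 97.0, 103.0


@dataclass
class Reading:
    batch_id: str
    assay_pct: float      # measured quality attribute
    dryer_temp_c: float   # process parameter recorded with the measurement


def classify(reading: Reading) -> str:
    """Return 'fail' if out of spec, 'warn' if outside the alert band, else 'pass'."""
    if not SPEC_LOW <= reading.assay_pct <= SPEC_HIGH:
        return "fail"
    if not ALERT_LOW <= reading.assay_pct <= ALERT_HIGH:
        return "warn"
    return "pass"


def flag_deviations(readings: list[Reading]) -> list[tuple[str, str, float]]:
    """List non-passing readings together with the linked process parameter."""
    return [
        (r.batch_id, classify(r), r.dryer_temp_c)
        for r in readings
        if classify(r) != "pass"
    ]


if __name__ == "__main__":
    readings = [
        Reading("B-101", 99.8, 60.0),
        Reading("B-102", 103.6, 64.5),
        Reading("B-103", 94.2, 68.0),
    ]
    for batch, status, temp in flag_deviations(readings):
        print(batch, status, temp)
```

Each flagged result travels with its process parameter. In this made-up data, an operator would see that the warning and the failure coincide with rising dryer temperatures and could adjust conditions before the next batch.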

To improve process quality, more companies across industries are implementing process standards that guide how procedures are developed and executed across the innovation lifecycle. In practice, this means organizations test their procedures before executing them to make sure they are valid, rugged, and reliable. Once procedures are validated, organizations capture them as best practices to reduce variability in outcomes when the procedures are deployed. They are also beginning to use informatics technologies to automate workflows and remove as many manual steps from process execution as possible.

Consider the experience of a large pharmaceutical company that identified a bottleneck in high-volume sample testing of active pharmaceutical ingredients and drug products, including testing to determine compliance with specifications. A small dedicated workforce was responsible for running approximately 20 different techniques and recording the results in paper lab notebooks. The results were subject to manual data entry and transcription errors, so each step required constant “double checking.” That slowed cycle times and taxed limited lab resources. Furthermore, results were often variable because tests were executed inconsistently.

To address these challenges and help speed the cycle times involved in moving drug products out of development, the company deployed a lab execution system that enables it to define, validate, and control testing procedures electronically. With the system in place, lab managers can automate routine workflows that were previously handled manually and quickly identify potential issues “at line,” rather than later downstream.

The system also captures test results directly from lab equipment and instruments, eliminating manual transcription and data entry. Greater process automation and broader data integration mean fewer errors, which reduces rework and compliance risk while increasing process efficiency. Since deploying the solution, the company’s quality control laboratories have recorded a productivity gain of approximately 20 percent compared with the paper-based system, with improvements of up to 50 percent for some individual procedures.
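It helps to be precise about what figures like these imply. The source does not define “productivity,” so this sketch assumes it means tests completed per analyst-hour. Under that assumption, a 20 percent gain means the same staff completes 1.2 times as many tests. That corresponds to roughly a 17 percent reduction in time per test, not 20 percent. The function name and the baseline throughput are hypothetical.

```python
def time_per_test_reduction(productivity_gain: float) -> float:
    """Fractional drop in time per test for a fractional gain in tests per hour.

    Assumption: productivity = tests completed per analyst-hour.
    """
    return 1 - 1 / (1 + productivity_gain)


if __name__ == "__main__":
    baseline_tests_per_week = 100  # hypothetical baseline throughput
    for gain in (0.20, 0.50):
        new_throughput = baseline_tests_per_week * (1 + gain)
        print(f"{gain:.0%} gain: {new_throughput:.0f} tests/week, "
              f"{time_per_test_reduction(gain):.1%} less time per test")
```

Under the same assumption, the “up to 50 percent” figure for individual procedures would mean those procedures take about one-third less time each.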

This example demonstrates how the creation of high quality, consistent process standards — and the deployment of technology to support enterprise-wide process execution — can help organizations close product lifecycle productivity gaps that are largely avoidable with better control. Beyond productivity improvements, capturing procedure workflows and related data also has another benefit — it empowers organizations to continuously improve their process standards.

Consider the insight that could be gained by applying modeling and simulation technology to the last six months or year of process data spanning research, development, and manufacturing. Over time, organizations will be able to identify patterns that lead to both desirable and undesirable outcomes, and apply algorithms that will enable them to predict what might happen if a new variable is introduced to a process.

For instance: How will the production line be affected if ingredient X must be replaced with ingredient Y? How does speeding up or slowing down a specific processing step affect sample quality? What will happen if testing lab resources are reduced by 25 percent? As the saying goes, “you can’t manage what you don’t measure.” Better, more integrated data management enables better measurement and analysis, and better measurement and analysis lead to better process quality. It’s all connected.
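The last what-if question can be roughed out before any detailed simulation is built. The sketch below uses a simple capacity model, which is an assumption and not something the source describes. Utilization equals incoming samples per day divided by total analyst capacity per day, and a lab running above 100 percent utilization accumulates a backlog. All function names and figures are hypothetical.

```python
def utilization(samples_per_day: float, analysts: float,
                samples_per_analyst_day: float) -> float:
    """Fraction of lab capacity consumed by incoming work (> 1 means backlog grows)."""
    return samples_per_day / (analysts * samples_per_analyst_day)


def daily_backlog_growth(samples_per_day: float, analysts: float,
                         samples_per_analyst_day: float) -> float:
    """Samples added to the queue each day once demand exceeds capacity (else 0)."""
    return max(0.0, samples_per_day - analysts * samples_per_analyst_day)


if __name__ == "__main__":
    demand = 64        # hypothetical samples arriving per day
    per_analyst = 10   # hypothetical samples one analyst completes per day
    for analysts in (8, 8 * 0.75):  # current staffing vs. a 25 percent cut
        u = utilization(demand, analysts, per_analyst)
        growth = daily_backlog_growth(demand, analysts, per_analyst)
        print(f"{analysts:g} analysts: {u:.0%} utilized, backlog +{growth:g}/day")
```

In this made-up case, a lab that is comfortably loaded at 80 percent utilization tips past full capacity after a 25 percent cut. Removing a quarter of capacity raises utilization by a factor of 1/0.75, or about 1.33. A fuller simulation would also account for variability in arrivals and test times, which makes queues build well before utilization reaches 100 percent.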

Businesses that seek to improve manufacturing and operational excellence by optimizing their investments in scientific innovation must find ways to manage that innovation more efficiently and consistently. This will require fundamental changes in how people, processes, technology, and data are aligned across the innovation and product lifecycles. True productivity and quality gains will be realized only when data and processes can move bi-directionally between upstream and downstream activities, from early research to manufacturing, as seamlessly as possible. Attention to more holistic informatics and consistent process execution today will lead to faster, more profitable, and repeatable innovation tomorrow.

[1] IDC Manufacturing Insights: Accelerating Science-Led Innovation for Competitive Advantage

About the author

Ken Rapp is managing director for Accelrys’ Analytical, Development, Quality and Manufacturing (ADQM) business segment and recently served as President and CEO of VelQuest, which was acquired by Accelrys in January 2012. He co-founded VelQuest in 1999 to help pharmaceutical manufacturers close productivity gaps during product development and bring new drugs to market faster with innovative, compliance-focused procedure execution management for lab and manufacturing operations.